Citation: Zhou CD, Huang Y, Yang YG et al. Streamlined photonic reservoir computer with augmented memory capabilities. Opto-Electron Adv 8, 240135 (2025). doi: 10.29026/oea.2025.240135

Streamlined photonic reservoir computer with augmented memory capabilities


Abstract

Photonic platforms are emerging as a promising option for meeting the ever-growing demand for artificial intelligence, and photonic time-delay reservoir computing (TDRC) is among the most widely anticipated approaches. Although this computing paradigm needs only a single photonic device as the nonlinear node for data processing, its performance relies heavily on the fading memory provided by the delay feedback loop (FL), which restricts the extensibility of physical implementations, especially for highly integrated chips. Here, we present a simplified photonic scheme that allows more flexible parameter configurations by leveraging a purpose-designed quasi-convolution coding (QC), which completely removes the dependence on the FL. Unlike delay-based TDRC, the encoded data in QC-based RC (QRC) enable temporal feature extraction, which augments the memory capability. The proposed QRC can therefore handle time-related tasks and sequential data without an FL. Furthermore, the scheme can be implemented in hardware with a low-power, easily integrable vertical-cavity surface-emitting laser for high-performance parallel processing. We validate the concept through simulations and experiments comparing QRC and TDRC, in which the structurally simpler QRC outperforms TDRC across various benchmark tasks. Our results may point to a promising solution for the hardware implementation of deep neural networks.
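The idea that an input encoding can supply memory to a reservoir with no feedback loop can be illustrated with a toy numerical sketch. The code below is not the authors' QC: it assumes a plain sliding window over the last `q` input samples, a random input mask, a memoryless `tanh` node layer, and a ridge-regression readout. All names (`window_encode`, `recall_nmse`) and parameter values are hypothetical, and the step coefficient β is not modeled. The readout is trained to recall the input from three steps earlier.

```python
import numpy as np

def window_encode(u, q):
    """Return an (N, q) array whose row n is [u[n], u[n-1], ..., u[n-q+1]].

    Samples before the start of the sequence are treated as zero.
    This sliding window is an illustrative stand-in, not the paper's QC.
    """
    padded = np.concatenate([np.zeros(q - 1), u])
    cols = [padded[q - 1 - k : len(padded) - k] for k in range(q)]
    return np.stack(cols, axis=1)

def recall_nmse(q, delay=3, n_nodes=50, n_samples=2000, ridge=1e-6, seed=0):
    """Test NMSE for recalling u[n - delay] with a feedback-free reservoir."""
    rng = np.random.default_rng(seed)
    u = rng.uniform(0.0, 0.5, n_samples)
    # Target: the input delayed by `delay` steps (zeros before the start).
    target = np.concatenate([np.zeros(delay), u[:-delay]])

    # Memoryless nonlinear nodes: each state depends only on the current window.
    mask = rng.normal(0.0, 0.5, (q, n_nodes))
    bias = rng.normal(0.0, 0.5, n_nodes)
    states = np.tanh(window_encode(u, q) @ mask + bias)
    states = np.hstack([states, np.ones((n_samples, 1))])  # constant readout term

    # Ridge-regression readout trained on the first half, tested on the second.
    half = n_samples // 2
    a, y = states[:half], target[:half]
    w = np.linalg.solve(a.T @ a + ridge * np.eye(a.shape[1]), a.T @ y)
    err = target[half:] - states[half:] @ w
    return float(np.mean(err ** 2) / np.var(target[half:]))

if __name__ == "__main__":
    for q in (1, 6):
        print(f"window length {q}: recall NMSE = {recall_nmse(q):.4f}")
```

With a window length of 1 the nodes see only the current sample, so the delayed input cannot be recovered. A window that spans the delay makes the recall task solvable even though the nodes themselves have no memory.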


Supplementary Information

Supplementary information for Streamlined photonic reservoir computer with augmented memory capabilities


Figure 1.

Concept of QC and RC structures. (a−c) Design of the proposed QC, which operates on a principle similar to convolutional coding in data processing but can additionally extract features in the temporal dimension and provide memory capability. (d−f) Schematic diagrams of different RC structures. The comparison of the nodes’ states between (d) spatial RC, (e) TDRC and (f) the proposed QRC shows that the encoded data provide memory capability through QC. NL, nonlinear nodes.


Figure 2.

Schematic diagram of the TDRC and the QRC, as well as their physical implementation based on the VCSEL. (a) Schematic architectures of the TDRC and QRC based on the VCSEL. Experimental setup of (b) the TDRC and (c) the QRC. PC, polarization controller; Att, attenuator; MZM, Mach-Zehnder Modulator; DL, delay line; EDFA, erbium-doped fiber amplifier; OBPF, optical bandpass filter; PBS, polarization beam splitter; PD, photodetector; AWG, arbitrary waveform generator; OSA, optical spectrum analyzer; OSC, oscilloscope; Ch, channel. The blue (red) line represents the optical (electrical) connection. (d) Optical spectra of the VCSEL. The black line represents the optical spectra of the free-running VCSEL. The red line represents XM and YM separated by PBS and PC.


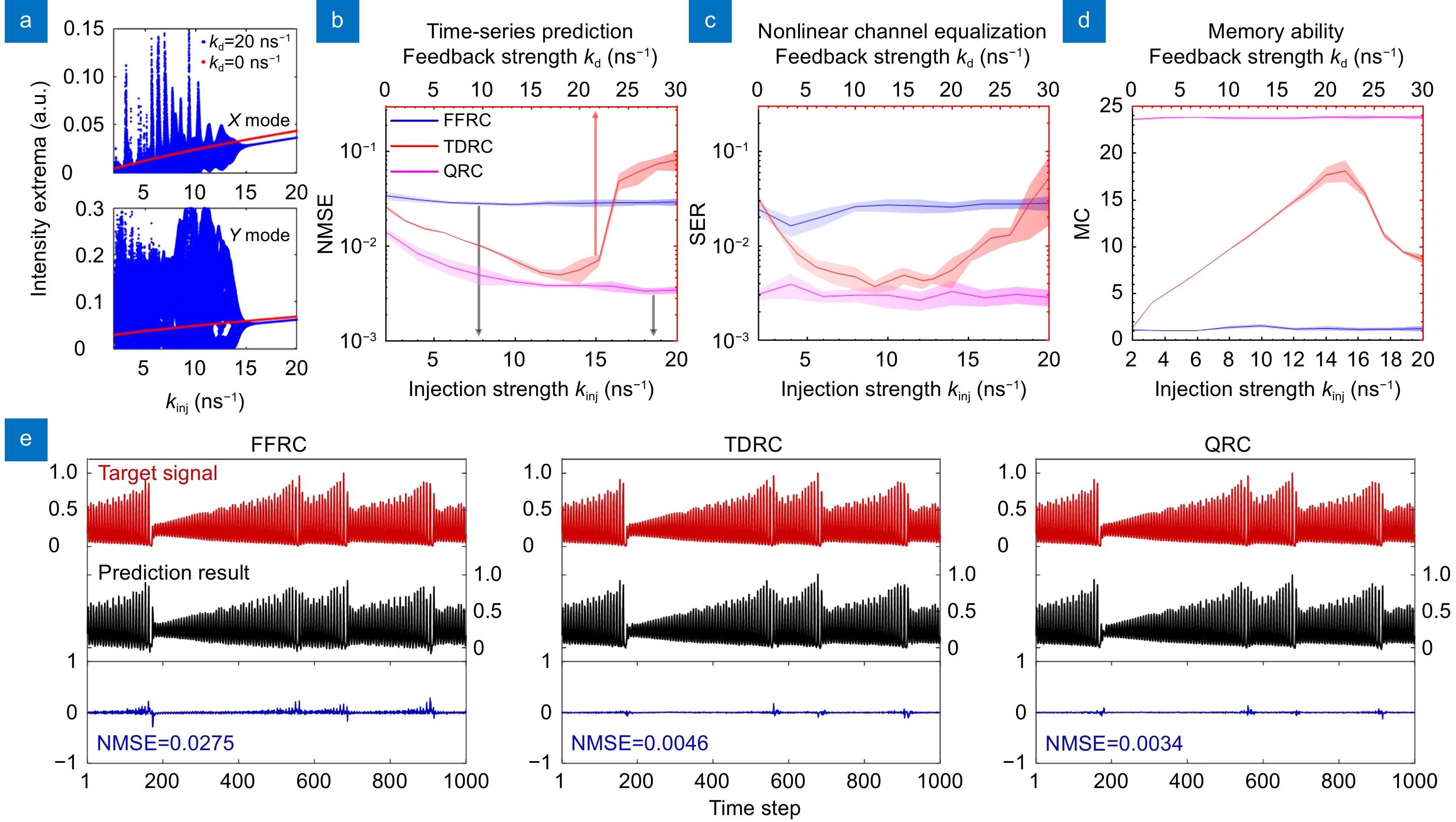

Figure 3.

(a) Bifurcation diagram of the VCSEL with kinj as the control parameter. In all panels, the extrema (maxima and minima) of the intensity time series are shown as dots. (b−d) Performance comparison of the FFRC, TDRC and QRC on the benchmark tasks mentioned before, with Q=6 and β=1.6 in (b); Q=9 and β=0.9 in (c); Q=39 and β=0.7 in (d). The injection power of the TDRC is set at 20 ns−1. The detailed results of the chaotic time-series prediction are shown in (e), with kinj=20 ns−1 for FFRC; kinj=20 ns−1 and kd=18 ns−1 for TDRC; Q=6, β=1.6 and kinj=20 ns−1 for QRC. The target signal (red), prediction result (black), and error between them (blue) are shown.


Figure 4.

Two-dimensional maps of (a, d, g) NMSE, (b, e, h) SER, and (c, f, i) linear MC in the parameter space of kinj and Δf. (a–c), (d–f) and (g–i) show the results of the FFRC, the TDRC and the QRC, respectively. Darker colors indicate smaller values and lighter colors indicate larger values. For the QRC, Q=6 and β=1.6 in (g); Q=9 and β=0.9 in (h); Q=39 and β=0.7 in (i). The feedback strength of the TDRC is set at 18 ns−1, 12 ns−1 and 21 ns−1 in (d), (e) and (f), respectively. These results stem from the joint training of XM and YM.
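For readers unfamiliar with the three figures of merit mapped here, the sketch below gives the definitions commonly used in the reservoir computing literature: normalized mean square error (NMSE), symbol error rate (SER), and linear memory capacity (MC) as a sum of squared correlation coefficients over delays. The exact normalization and delay range used in the paper are not stated on this page, so the function names and conventions are assumptions for illustration only.

```python
import numpy as np

def nmse(target, pred):
    """Mean square error normalized by the variance of the target."""
    target = np.asarray(target, dtype=float)
    pred = np.asarray(pred, dtype=float)
    return float(np.mean((target - pred) ** 2) / np.var(target))

def symbol_error_rate(symbols, decided):
    """Fraction of symbols whose decided value differs from the sent value."""
    return float(np.mean(np.asarray(symbols) != np.asarray(decided)))

def linear_memory_capacity(u, recalls):
    """Sum of squared correlations between delayed inputs and their recalls.

    recalls[k - 1] is assumed to be the readout output trained to reproduce
    the input u delayed by k steps, sampled on the same time grid as u.
    """
    u = np.asarray(u, dtype=float)
    total = 0.0
    for k, y in enumerate(recalls, start=1):
        y = np.asarray(y, dtype=float)
        # y[n] should approximate u[n - k], so align u[:-k] with y[k:].
        r = np.corrcoef(u[:-k], y[k:])[0, 1]
        total += r ** 2
    return float(total)
```

Under these conventions a perfect prediction gives an NMSE of 0, predicting the target mean gives an NMSE of 1, and each perfectly recalled delay contributes 1 to the memory capacity.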


Figure 5.

Analysis of the kernel size Q and the step coefficient β. The (a) NMSE, (b) SER and (c) MC as a function of the kernel size Q, with kinj=20 ns−1 and β=0.8. The (d) NMSE, (e) SER and (f) MC as a function of the step coefficient β, with kinj=20 ns−1 and Q=10. The original data and the encoded data are injected into XM and YM, respectively. Here, we use AF, which provides an additional nonlinear transformation of the output, to obtain the extended matrices [Vfx, Vfy]. The blue dashed lines show the performance achieved by injecting only the original data into XM, and the red dashed lines represent the trained results of the optical injection terms with encoding signals.


Figure 6.

Two-dimensional maps of (a, d) NMSE, (b, e) SER, and (c, f) linear MC in the parameter space of Q and β. (a–c) and (d–f) show the results without and with AF, respectively. These results stem from the joint training of XM and YM, with kinj=20 ns−1 and Δf=0 GHz.


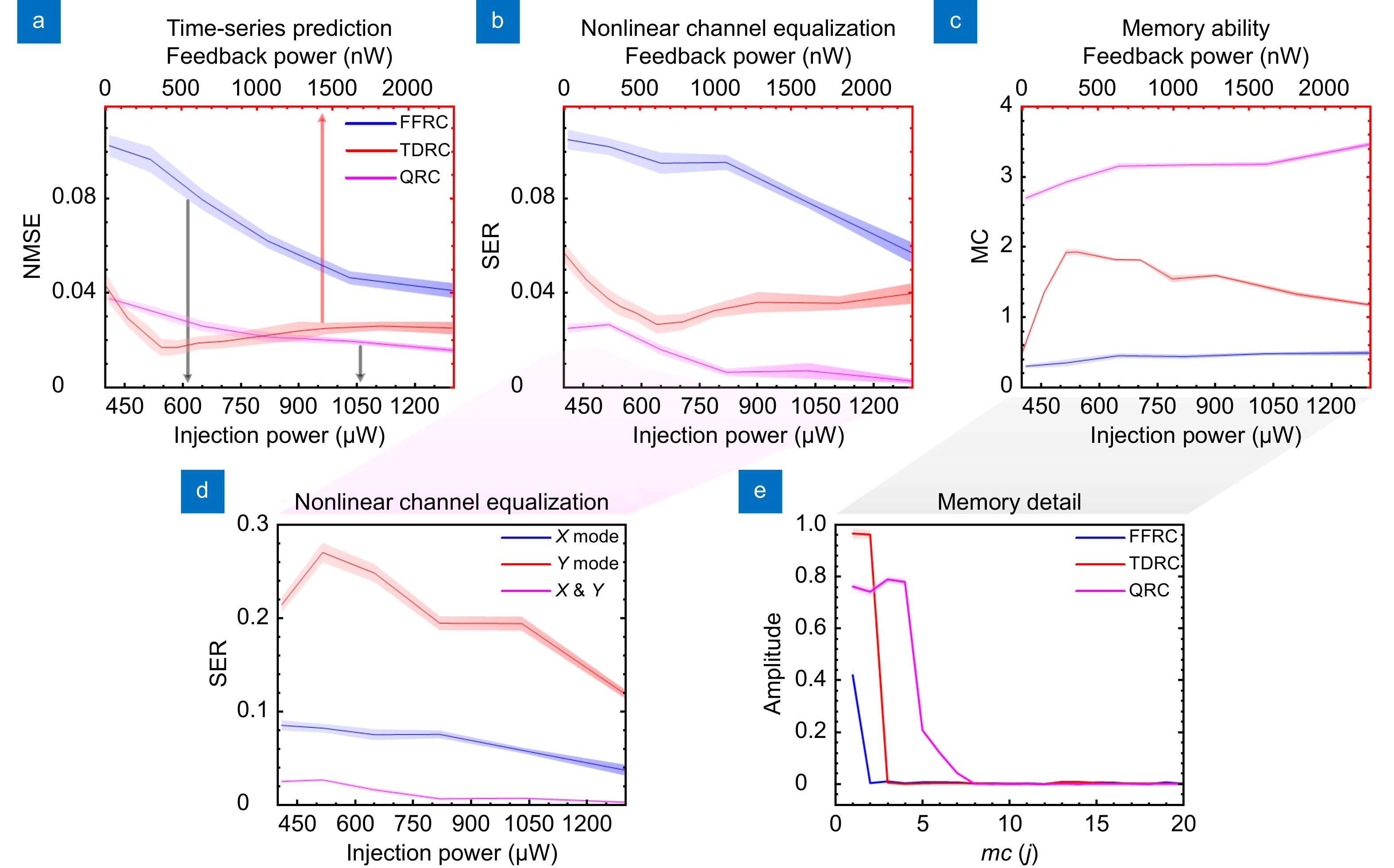

Figure 7.

Experimental performance comparison between the FFRC, the TDRC and the QRC on (a) time-series prediction, (b) nonlinear channel equalization and (c) memory ability, with Q=6 and β=1.6 in (a); Q=9 and β=0.9 in (b); Q=39 and β=0.7 in (c). The performance of the QRC on nonlinear channel equalization is detailed in (d), while (e) depicts the memory details of the three RCs when kinj is 1297.2 μW in the FFRC and the QRC, and 1292.4 μW in the TDRC.
