
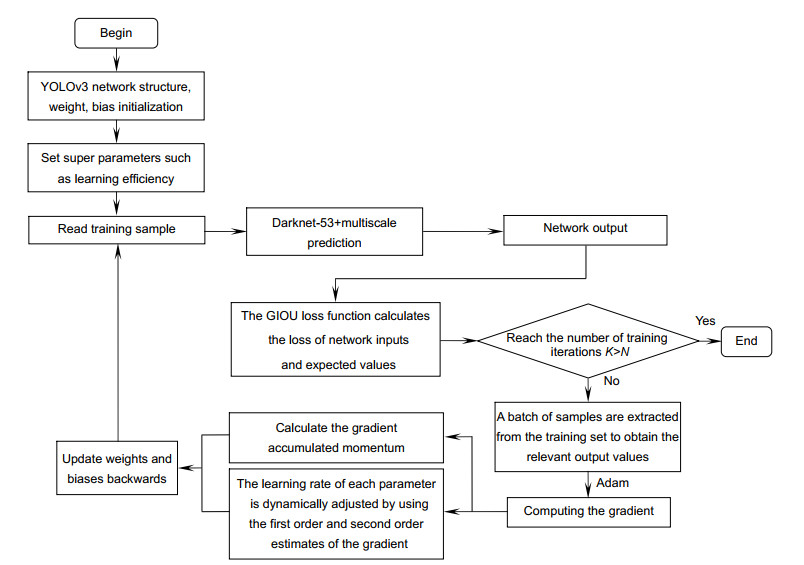


Abstract:

Mainstream target detection networks perform well on high-quality RGB images, but their detection performance drops significantly on low-resolution infrared images. To improve infrared target detection in complex scenes, this paper adopts the following measures. First, drawing on domain adaptation, appropriate infrared image preprocessing is applied to bring infrared images closer to RGB images, so that mainstream target detection networks can achieve higher detection accuracy on them. Second, taking the one-stage target detection network YOLOv3 as the base network, the algorithm replaces the original MSE loss function with the GIOU loss function; experiments show that this significantly improves detection accuracy on the public FLIR infrared dataset. Third, because target sizes in the FLIR dataset span a wide range, an SPP module based on the spatial pyramid idea is added to enrich the representational power of the feature maps and enlarge their receptive field, which further improves detection accuracy.
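The preprocessing in the first measure is compared experimentally in Table 2 (grayscale inversion, histogram equalization, de-noising plus sharpening). As a minimal sketch, assuming 8-bit single-channel infrared images stored as nested lists of integers, the two simplest variants can be written as follows. The function names `invert` and `equalize_histogram` are hypothetical, and the equalization uses the textbook CDF mapping, which may differ from the implementation the authors actually used.

```python
from collections import Counter

Image = list[list[int]]  # assumed format: 8-bit grayscale image, values 0..255


def invert(img: Image) -> Image:
    """Grayscale inversion: each pixel p becomes 255 - p."""
    return [[255 - p for p in row] for row in img]


def equalize_histogram(img: Image) -> Image:
    """Textbook histogram equalization via the cumulative distribution function."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    hist = Counter(pixels)
    # Cumulative count for each distinct gray level, in ascending order.
    cdf, running = {}, 0
    for level in sorted(hist):
        running += hist[level]
        cdf[level] = running
    cdf_min = cdf[min(hist)]
    if n == cdf_min:  # constant image: nothing to stretch
        return [row[:] for row in img]
    # Map each level so the output levels spread over the full 0..255 range.
    lut = {lvl: int((c - cdf_min) / (n - cdf_min) * 255 + 0.5) for lvl, c in cdf.items()}
    return [[lut[p] for p in row] for row in img]


img = [[100, 100], [101, 102]]
print(invert(img))              # [[155, 155], [154, 153]]
print(equalize_histogram(img))  # [[0, 0], [128, 255]]
```

Equalization stretches a narrow intensity range to the full 0..255 scale, while inversion only flips intensities. In Table 2, inversion was the only variant that raised mAP over the original images (60.71% vs 58.02%).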

Key words:

- infrared target detection
- deep learning
- complex scenario

Overview: In recent years, with the continuous development of computer vision, deep-learning-based target detection has improved significantly. However, mainstream target detection networks are mostly built for RGB images, and relatively few studies address infrared target detection. Moreover, these networks detect targets well in high-quality RGB images, but their performance drops significantly on low-resolution infrared images. Visible-light images have higher imaging resolution and richer target detail than infrared images, but they cannot be obtained under certain weather conditions. Infrared imaging, by contrast, offers long operating range, strong anti-interference ability and high measurement accuracy; it is not affected by weather, works day and night, and penetrates smoke well. Infrared imaging has therefore been widely used since its introduction, and the demand for infrared target detection is pressing.

To improve infrared target detection in complex scenes, this paper adopts the following measures. First, drawing on domain adaptation, appropriate infrared image preprocessing is applied to make infrared images closer to RGB images, so that mainstream target detection networks can be applied with higher detection accuracy. Second, the mean square error (MSE) loss treats each coordinate of a bounding box (BBox) as an independent variable and ignores the integrity of the box as a whole, and ℓn-norm losses are sensitive to object scale. The algorithm therefore takes the one-stage target detection network YOLOv3 as its base and replaces the original MSE loss function with the GIOU loss function. Experiments show that this significantly improves detection accuracy on FLIR, a public infrared dataset, and effectively alleviates the inaccurate localization of the original network. Third, because target sizes in the FLIR dataset span a wide range, an SPP module based on the spatial pyramid idea is added to enrich the representational power of the feature maps and enlarge their receptive field. Experimental results show that adding the SPP module lowers the detection error rate and, by overcoming the original shortcomings of YOLOv3, further improves detection accuracy compared with replacing the loss function with GIOU alone.
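The argument against MSE can be made concrete. The following is a minimal sketch in plain Python, assuming boxes in corner format `(x1, y1, x2, y2)`; it follows the GIOU definition (IoU minus the fraction of the smallest enclosing box not covered by the union of the two boxes) and uses `1 - GIOU` as the loss. The helper names are hypothetical, and this is not the authors' training code, which would operate on batched tensors inside YOLOv3.

```python
Box = tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2), x2 > x1, y2 > y1


def area(b: Box) -> float:
    """Area of a box; degenerate (negative-width or negative-height) boxes count as 0."""
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def giou(a: Box, b: Box) -> float:
    """Generalized IoU: IoU minus the share of the enclosing box C not covered by the union."""
    # Intersection rectangle (area 0 if the boxes do not overlap).
    inter = area((max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3])))
    union = area(a) + area(b) - inter
    iou = inter / union
    # Smallest axis-aligned box enclosing both a and b.
    c = area((min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3])))
    return iou - (c - union) / c


def giou_loss(pred: Box, target: Box) -> float:
    return 1.0 - giou(pred, target)


def mse_loss(pred: Box, target: Box) -> float:
    """Coordinate-wise MSE: each coordinate is treated as an independent variable."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / 4


small_pred, small_gt = (0, 0, 10, 10), (5, 0, 15, 10)
large_pred, large_gt = (0, 0, 100, 100), (50, 0, 150, 100)  # same layout, 10x scale
print(giou_loss(small_pred, small_gt), giou_loss(large_pred, large_gt))  # both 2/3
print(mse_loss(small_pred, small_gt), mse_loss(large_pred, large_gt))    # 12.5 1250.0
```

Both box pairs have the same relative layout (IoU = 1/3), so the GIOU loss is identical, whereas the coordinate MSE grows 100-fold when the boxes are scaled up 10 times. For non-overlapping boxes IoU is always 0, but GIOU still distinguishes near from far misses: `giou((0, 0, 1, 1), (2, 0, 3, 1))` is -1/3.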


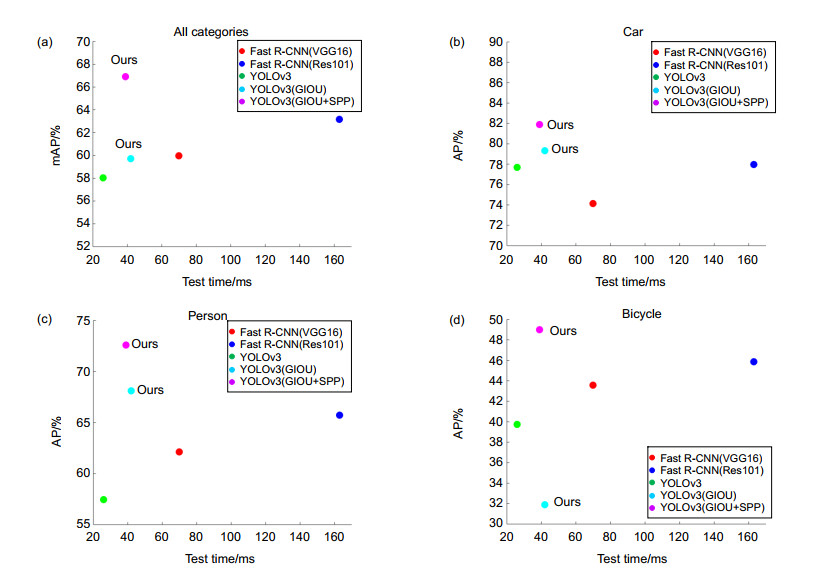

Figure 5. Detection speed and accuracy of different networks: (a) all categories; (b) car; (c) person; (d) bicycle

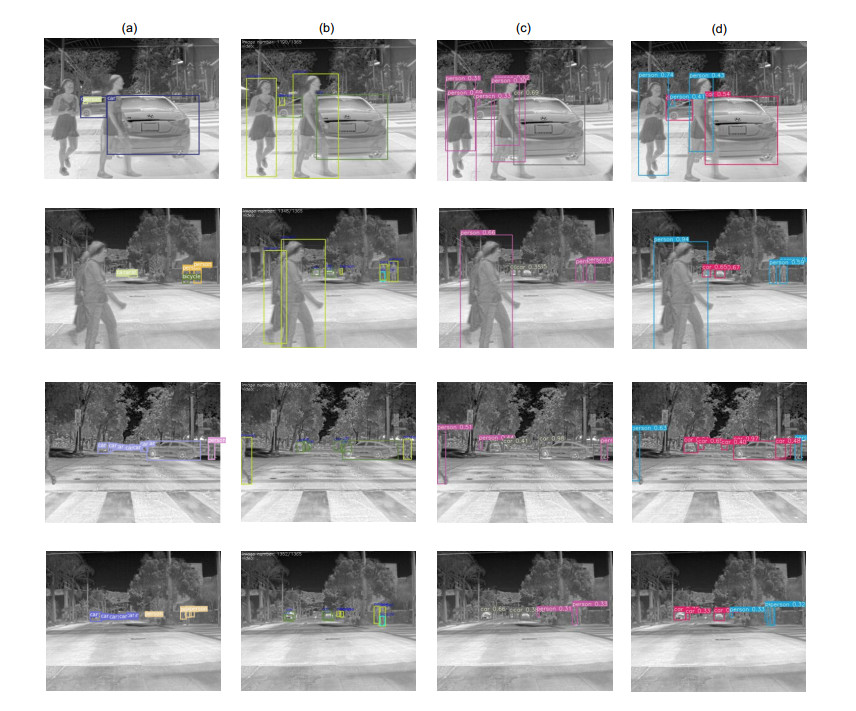

Figure 6. (a) Detection results of YOLOv3; (b) ground truth; (c) detection results of YOLOv3 with the GIOU loss function; (d) detection results of YOLOv3 with the GIOU loss function and the SPP module

Table 1. Training on the FLIR infrared dataset with weights pre-trained on ImageNet and MS COCO

| Pre-training dataset | mAP/% |
|---|---|
| ImageNet | 49.86 |
| MS COCO | 58.02 |

Table 2. Detection results of YOLOv3 trained on images with different preprocessing methods

| Preprocessing method | mAP/% |
|---|---|
| Original image | 58.02 |
| Inversion | 60.71 |
| Histogram equalization | 57.26 |
| De-noising + sharpening | 57.42 |

Table 3. Detection results of different frameworks on the FLIR dataset. The IOU threshold is 0.3 for Faster R-CNN and 0.6 for YOLOv3

| Detection method | AP/% (car) | AP/% (person) | AP/% (bicycle) | mAP/% | Test time/s |
|---|---|---|---|---|---|
| Faster R-CNN (VGG16) | 74.15 | 62.14 | 43.58 | 59.96 | 0.070 |
| Faster R-CNN (Res101) | 77.96 | 65.72 | 45.86 | 63.18 | 0.163 |
| YOLOv3 | 77.69 | 57.47 | 39.74 | 58.02 | 0.026 |
| Ours (YOLOv3+GIOU) | 79.3 | 68.10 | 31.90 | 59.70 | 0.042 |
| Ours (YOLOv3+GIOU+SPP) | 81.90 | 72.60 | 49.00 | 66.80 | 0.039 |
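The internal structure of the SPP module in the last row of Table 3 is not detailed in this section. A common form, used in the widely distributed YOLOv3-SPP configuration, max-pools the same feature map with stride 1 and "same" padding at kernel sizes 5, 9 and 13 and concatenates the pooled maps with the input along the channel axis, so the spatial size is unchanged while each position gains context from progressively larger neighbourhoods. The sketch below assumes that form and a feature map stored as nested lists `[channel][row][col]`; the kernel sizes, channel order and function names are assumptions, not details confirmed by the paper.

```python
FeatureMap = list[list[list[float]]]  # assumed layout: [channel][row][col]


def max_pool_same(channel: list[list[float]], k: int) -> list[list[float]]:
    """Stride-1 max pooling with 'same' padding, so the output keeps the input's H x W.

    Out-of-bounds positions are skipped, which is equivalent to padding with -inf.
    """
    h, w = len(channel), len(channel[0])
    r = k // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = (
                channel[y][x]
                for y in range(max(0, i - r), min(h, i + r + 1))
                for x in range(max(0, j - r), min(w, j + r + 1))
            )
            row.append(max(window))
        out.append(row)
    return out


def spp(fmap: FeatureMap, kernels: tuple[int, ...] = (5, 9, 13)) -> FeatureMap:
    """Input channels followed by their max-pooled copies, grouped by kernel size."""
    pooled = [max_pool_same(ch, k) for k in kernels for ch in fmap]
    return [[row[:] for row in ch] for ch in fmap] + pooled


fmap = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]]]  # one channel, 3 x 3
out = spp(fmap)
print(len(out), len(out[0]), len(out[0][0]))  # 4 3 3
print(max_pool_same(fmap[0], 3))              # [[5, 6, 6], [8, 9, 9], [8, 9, 9]]
```

Because every pooling step uses stride 1, the output has C × (1 + number of kernels) channels at the original resolution, and the larger kernels only widen the context each position sees. In a real network the same operation would typically use a framework layer such as PyTorch's `nn.MaxPool2d(k, stride=1, padding=k // 2)` followed by channel concatenation.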

