Design and implementation of the DRFCN deep network for military target identification


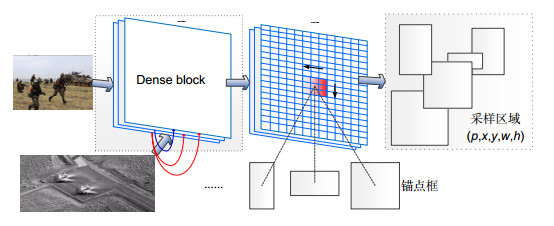

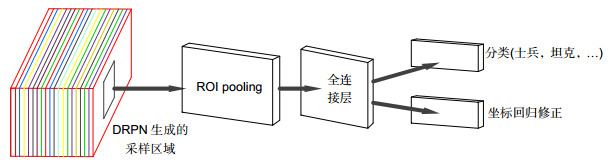


Abstract: Automatic target recognition (ATR) has long been a key and difficult problem in the military field. This paper designs and implements DRFCN, a new deep network for military target identification. First, in the DRPN part, convolution modules are densely connected so that the features of every layer in the deep network model are reused to extract high-quality target sampling regions. Second, in the DFCN part, semantic feature information from high-level and low-level feature maps is fused to predict the category and location of the target in each sampling region. Finally, the structure of the DRFCN deep network model and its parameter training method are given. We further analyze and discuss DRFCN experimentally: 1) Comparative experiments on the PASCAL VOC dataset show that, owing to the densely connected convolution modules, DRFCN clearly outperforms existing deep-learning-based target recognition algorithms in mean average precision, real-time performance and model size; they also verify that DRFCN effectively addresses the vanishing and exploding gradient problems. 2) Experiments on a self-built military target dataset show that DRFCN meets the accuracy and real-time requirements of military target recognition.
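The dense connectivity used in the DRPN part can be illustrated with a minimal sketch. It assumes DenseNet-style connectivity (each layer receives the channel-wise concatenation of the block input and all earlier layer outputs); the layer function `toy_layer`, the growth rate of 32 and the 64 input channels are hypothetical, since the exact block configuration is not given here. Spatial dimensions are omitted so the sketch runs with the standard library only.

```python
from typing import Callable, List

# Toy "feature": one value per channel; spatial dimensions are omitted.
Feature = List[float]
Layer = Callable[[Feature], Feature]


def dense_block(x: Feature, layers: List[Layer]) -> Feature:
    """DenseNet-style block: every layer receives the channel-wise
    concatenation of the block input and all earlier layer outputs."""
    features = [x]
    for layer in layers:
        concat = [v for f in features for v in f]  # reuse all earlier features
        features.append(layer(concat))
    # The block output is again the concatenation of everything produced.
    return [v for f in features for v in f]


def toy_layer(growth_rate: int) -> Layer:
    """Hypothetical stand-in for a BN-ReLU-Conv unit that emits
    `growth_rate` new channels (here simply the mean of its input)."""
    def layer(inp: Feature) -> Feature:
        mean = sum(inp) / len(inp)
        return [mean] * growth_rate
    return layer


def input_widths(in_channels: int, growth_rate: int, num_layers: int) -> List[int]:
    """Number of input channels seen by each layer of the block."""
    return [in_channels + i * growth_rate for i in range(num_layers)]


out = dense_block([1.0] * 64, [toy_layer(32) for _ in range(4)])
print(len(out))                 # 192
print(input_widths(64, 32, 4))  # [64, 96, 128, 160]
```

With 64 input channels and four layers of growth rate 32, the block outputs 64 + 4 × 32 = 192 channels, and the four layers see 64, 96, 128 and 160 input channels. Every later layer therefore has a direct path to every earlier feature, which is the feature reuse the abstract attributes to the DRPN part.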


Key words:

- deep learning

- target recognition

- PASCAL VOC dataset

- military target


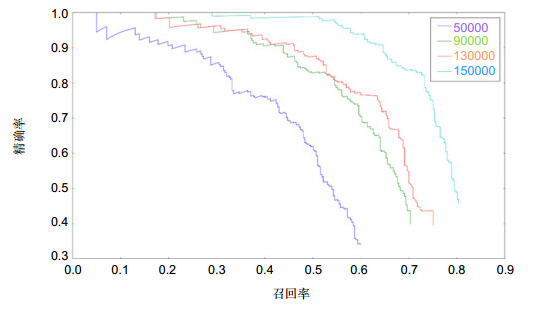

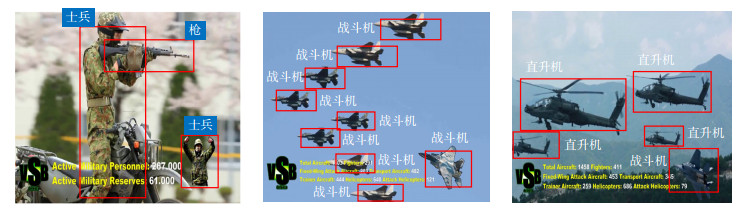

Overview: Automatic target recognition (ATR) has long been a key and difficult problem in the military field. Photoelectric detection is one of the key detection methods in modern early-warning and detection information networks. In actual combat, optoelectronic devices acquire massive amounts of image and video data of different types, timings and resolutions. For such massive infrared and visible-light imagery, this paper designs and implements DRFCN, a deep network for military target identification. First, DRFCN takes an image as input, and in the DRPN part convolution modules are densely connected so that the features of every layer in the deep network model are reused to extract high-quality target sampling regions. Second, in the DFCN part, semantic feature information from high-level and low-level feature maps is fused to predict the category and location of the target in each sampling region. Finally, the structure of the DRFCN deep network model and its parameter training method are given.

The experimental analysis and discussion show the following. 1) Over a large number of experiments, we plot various loss curves and P-R curves, which verify the convergence of the DRFCN algorithm. 2) For the classification model pre-trained on the ImageNet dataset, DRFCN achieves 93.1% Top-5 accuracy and 76.1% Top-1 accuracy with a model size of 112.3 MB. 3) On the PASCAL VOC dataset, DRFCN reaches an mAP of 75.3%, 5.4 percentage points higher than the VGG16 network, and its test time is 0.12 s per image, 0.30 s less than VGG16. DRFCN is therefore superior to existing deep-learning-based target recognition algorithms, and the experiments also verify that it effectively addresses the vanishing and exploding gradient problems. 4) On the self-built military target dataset, DRFCN achieves an mAP of 77.5% with a test time of 0.20 s per image. Compared with its result on PASCAL VOC2007, the mAP is 2.2 percentage points higher, while the test time is 80 ms longer. These results show that DRFCN meets the accuracy and real-time requirements of military target recognition. In summary, the overall performance of DRFCN is better than that of existing deep learning networks: it improves mean average precision, reduces the size of the deep network model, and effectively addresses the vanishing and exploding gradient problems.
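The DFCN fusion of high-level and low-level feature maps can be sketched as follows, assuming the coarser high-level map is brought to the low-level resolution by nearest-neighbour upsampling and then concatenated along the channel axis. The fusion operator is not specified here, so this is only one plausible choice (element-wise addition, as in feature pyramid networks, would be another). `upsample_nearest` and `fuse` are hypothetical helper names, and feature maps are plain nested lists (channels × height × width).

```python
from typing import List

Channel = List[List[float]]  # height x width
FeatureMap = List[Channel]   # channels x height x width


def upsample_nearest(fm: FeatureMap, factor: int) -> FeatureMap:
    """Nearest-neighbour upsampling: repeat every row and every value `factor` times."""
    return [
        [[v for v in row for _ in range(factor)] for row in ch for _ in range(factor)]
        for ch in fm
    ]


def fuse(low: FeatureMap, high: FeatureMap) -> FeatureMap:
    """Fuse a fine low-level map with a coarse high-level map by upsampling
    the latter and concatenating the two along the channel axis."""
    factor = len(low[0]) // len(high[0])
    up = upsample_nearest(high, factor)
    if len(up[0]) != len(low[0]) or len(up[0][0]) != len(low[0][0]):
        raise ValueError("spatial sizes differ after upsampling")
    return low + up  # low-level channels first, then upsampled high-level ones


low = [[[0.0] * 4 for _ in range(4)] for _ in range(2)]  # 2 channels, 4x4
high = [[[1.0, 2.0], [3.0, 4.0]] for _ in range(3)]      # 3 channels, 2x2
fused = fuse(low, high)
print(len(fused), len(fused[0]), len(fused[0][0]))       # 5 4 4
print(fused[2][0])                                       # [1.0, 1.0, 2.0, 2.0]
```

The fused map keeps the spatial detail of the low-level features while every position also carries the semantic channels of the high-level map, which is the combination the DFCN part relies on for predicting category and location.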



Table 1. Model structure of the DRFCN16 object recognition algorithm

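| DRFCN layer | Output size (w×h) | DRFCN16 |
|---|---|---|
| Convolution | 250×500 | 7×7 conv, stride 2, padding 3 |
| Pooling | 126×251 | 3×3 pooling, stride 2, padding 1 |
| Densely connected convolution layers | 126×251 | Multiple convolution layers |
| Convolution | 63×126 | 5×5 conv, stride 2, padding 2 |
| Pooling | 32×64 | 3×3 pooling, stride 2, padding 1 |
| Densely connected convolution layers | 32×64 | Multiple convolution layers |
| Convolution | 32×64 | 3×3 conv, stride 1, padding 1 |
| Pooling | 32×64 | 3×3 pooling, stride 1, padding 1 |
| Densely connected convolution layers | 32×64 | Multiple convolution layers |
| Convolution | 32×64 | 3×3 conv, stride 1, padding 1 |
| ROI | 7×7 | ROI pooling layer |
| FC | 21 | Connected to the classifier; outputs confidence scores |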
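The output sizes in Table 1 follow from the usual size formula floor((n + 2p - k) / s) + 1 for an input of size n, kernel k, stride s and padding p. The sketch below traces the sizes from the 250×500 output of the first convolution. It assumes floor rounding for convolutions and ceil rounding for pooling (the Caffe convention; floor rounding would give 125×250 rather than the listed 126×251 after the first pooling), and it omits the densely connected layers, which Table 1 lists as size-preserving. The helper names are hypothetical.

```python
import math
from typing import List, Tuple


def conv_out(n: int, k: int, s: int, p: int) -> int:
    """Convolution output size with floor rounding."""
    return (n + 2 * p - k) // s + 1


def pool_out(n: int, k: int, s: int, p: int) -> int:
    """Pooling output size with ceil rounding (assumed, Caffe-style)."""
    return math.ceil((n + 2 * p - k) / s) + 1


# (layer type, kernel, stride, padding) after the first 7x7 convolution, per Table 1;
# densely connected layers are omitted because they keep the spatial size.
STAGES = [
    ("pool", 3, 2, 1),
    ("conv", 5, 2, 2),
    ("pool", 3, 2, 1),
    ("conv", 3, 1, 1),
    ("pool", 3, 1, 1),
    ("conv", 3, 1, 1),
]


def trace(w: int, h: int) -> List[Tuple[str, int, int]]:
    """Apply the stages in order and record (type, width, height) after each one."""
    sizes = []
    for kind, k, s, p in STAGES:
        size_fn = conv_out if kind == "conv" else pool_out
        w, h = size_fn(w, k, s, p), size_fn(h, k, s, p)
        sizes.append((kind, w, h))
    return sizes


for kind, w, h in trace(250, 500):
    print(f"{kind}: {w}x{h}")
```

Under these assumptions the 5×5 stride-2 convolution produces a 63×126 map, and the second pooling layer brings it to the 32×64 size that the remaining stride-1 layers preserve.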

Table 2. Comparison of model sizes and classification accuracies of DRFCN models pre-trained on the ImageNet dataset

| Model | Top-1/% | Top-5/% | Model size/MB |
|---|---|---|---|
| DRFCN5 | 74.9 | 90.1 | 50.8 |
| DRFCN16 | 76.1 | 93.1 | 112.3 |
| VGG16 | 76.0 | 93.2 | 548.3 |
| ResNet-18 | 70.4 | 89.6 | 44.6 |
| ResNet-101 | 80.1 | 94.0 | 203.5 |
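To make the model-size column of Table 2 easier to read, the short script below expresses each pre-trained model's size as a fraction of VGG16 and its Top-1 accuracy relative to VGG16. The numbers are copied from Table 2; the helper name `relative_to_baseline` is hypothetical.

```python
# (Top-1 %, Top-5 %, model size in MB), copied from Table 2
TABLE2 = {
    "DRFCN5": (74.9, 90.1, 50.8),
    "DRFCN16": (76.1, 93.1, 112.3),
    "VGG16": (76.0, 93.2, 548.3),
    "ResNet-18": (70.4, 89.6, 44.6),
    "ResNet-101": (80.1, 94.0, 203.5),
}


def relative_to_baseline(model: str, baseline: str = "VGG16") -> dict:
    """Size as a fraction of the baseline and Top-1 difference in percentage points."""
    top1, _, size = TABLE2[model]
    b_top1, _, b_size = TABLE2[baseline]
    return {
        "size_ratio": round(size / b_size, 3),
        "top1_delta_pp": round(top1 - b_top1, 1),
    }


for name in TABLE2:
    print(name, relative_to_baseline(name))
```

DRFCN16 comes out at about 0.205 of VGG16's size (112.3 MB versus 548.3 MB, roughly 4.9 times smaller) with a Top-1 accuracy 0.1 points higher, while ResNet-101 trades a larger model for higher accuracy.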

Table 3. Comparison of the DRFCN algorithm with state-of-the-art target recognition models on the VOC2007 dataset

| Model | Training time per image/s | Test time per image/s | mAP/% |
|---|---|---|---|
| DRFCN5 | 0.21 | 0.09 | 72.1 |
| DRFCN16 | 0.28 | 0.12 | 75.3 |
| VGG16 | 1.20 | 0.42 | 69.9 |
| RFCN-101 | 0.45 | 0.17 | 76.6 |
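The VOC2007 comparisons quoted in the overview (5.4 points higher mAP and 0.30 s less test time than VGG16) can be checked directly against Table 3. The sketch below derives the mAP gain, test-time saving, speed-up and throughput for any pair of rows; the helper `compare` is hypothetical, and the throughput figure simply assumes one image is processed at a time (1 / test time).

```python
# (training time/s, test time/s, mAP/%) per image, copied from Table 3
TABLE3 = {
    "DRFCN5": (0.21, 0.09, 72.1),
    "DRFCN16": (0.28, 0.12, 75.3),
    "VGG16": (1.20, 0.42, 69.9),
    "RFCN-101": (0.45, 0.17, 76.6),
}


def compare(model: str, baseline: str = "VGG16") -> dict:
    """Differences of `model` relative to `baseline` on VOC2007."""
    _, t, m = TABLE3[model]
    _, t_b, m_b = TABLE3[baseline]
    return {
        "map_gain_pp": round(m - m_b, 1),         # percentage points
        "test_time_saving_s": round(t_b - t, 2),  # seconds per image
        "speedup": round(t_b / t, 2),
        "images_per_s": round(1 / t, 1),          # assumes one image at a time
    }


print(compare("DRFCN16"))
print(compare("DRFCN16", "RFCN-101"))
```

`compare("DRFCN16")` gives a 5.4-point mAP gain, a 0.30 s saving and a 3.5 times speed-up (about 8.3 images per second). `compare("DRFCN16", "RFCN-101")` shows the trade-off against R-FCN with ResNet-101: 1.3 points lower mAP for 0.05 s less test time per image.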

Table 4. Mean average precision and test time of the DRFCN16 algorithm on the self-built military target dataset

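| Test time per image/s | mAP/% | Fighter jet | Tank | Helicopter | Warship | Gun | Missile | Cannon | Submarine | Soldier |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.20 | 77.5 | 90.7 | 77.2 | 91.6 | 78.7 | 69.1 | 74.2 | 68.8 | 67.7 | 79.5 |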
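The mAP in Table 4 is consistent with the usual definition of mean average precision as the unweighted mean of the per-class APs. The sketch below shows both steps: `voc07_ap` computes one class's AP from ranked detections using the 11-point interpolation of the PASCAL VOC2007 protocol (an assumption, since the AP variant used is not stated here), and `mean_ap` averages the nine per-class values copied from Table 4. Both function names are hypothetical, and deciding whether a detection is a true positive (normally IoU of at least 0.5 with a not-yet-matched ground-truth box) is left to the caller.

```python
from typing import Dict, List


def voc07_ap(scores: List[float], is_tp: List[bool], num_gt: int) -> float:
    """11-point interpolated AP (PASCAL VOC2007 style) for one class.
    `is_tp[i]` says whether detection i matched an unclaimed ground-truth box."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    precision, recall = [], []
    for i in order:  # walk detections from most to least confident
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precision.append(tp / (tp + fp))
        recall.append(tp / num_gt)
    ap = 0.0
    for t in (k / 10 for k in range(11)):  # recall thresholds 0.0, 0.1, ..., 1.0
        # interpolated precision: best precision at any recall >= t
        ap += max((p for p, r in zip(precision, recall) if r >= t), default=0.0) / 11
    return ap


# Per-class AP (%) on the self-built military target dataset, copied from Table 4
TABLE4_AP: Dict[str, float] = {
    "fighter jet": 90.7, "tank": 77.2, "helicopter": 91.6, "warship": 78.7,
    "gun": 69.1, "missile": 74.2, "cannon": 68.8, "submarine": 67.7, "soldier": 79.5,
}


def mean_ap(per_class: Dict[str, float]) -> float:
    """mAP as the unweighted mean of per-class APs."""
    return sum(per_class.values()) / len(per_class)


print(round(voc07_ap([0.9, 0.8, 0.7, 0.6], [True, False, True, True], num_gt=4), 4))  # 0.6136
print(round(mean_ap(TABLE4_AP), 1))  # 77.5
```

The nine per-class APs average to 77.5%, matching the reported mAP. Against DRFCN16's 75.3% VOC2007 mAP in Table 3 this is 2.2 points higher, while the 0.20 s test time is 80 ms longer than the 0.12 s measured on VOC2007.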

