An anisotropic edge total generalized variation energy super-resolution based on fast l1-norm dictionary edge representations


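Chinese abstract:

To address the low imaging resolution and severe noise interference of optical cameras, this paper proposes a high-precision super-resolution method that also removes noise effectively: super-resolution based on fast l1-norm sparse representation and second-order total generalized variation (TGV). First, the anisotropic diffusion tensor (ADT) is used as high-frequency edge information, and a fast l1-norm sparse representation method builds dictionaries for the LR images and their corresponding high-frequency ADT information. Second, the ADT edge information learned through the dictionaries is combined with the TGV model into a new regularization term. Finally, the new regularization term is used to construct the super-resolution cost function, and an image-enhancement post-processing step optimizes the whole image. The results show that the algorithm is feasible and robust on simulated data and ISO 12233 target data, effectively removes outliers such as noise, and produces high-quality, sharp images. Compared with other classical algorithms, the proposed algorithm achieves higher peak signal-to-noise ratio (PSNR) and structural similarity (SSIM).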
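To illustrate how an anisotropic diffusion tensor can act as high-frequency edge information, the sketch below computes the tensor form used in the anisotropic TGV of Ferstl et al. [19]: T^{1/2} = exp(−β|∇I|^γ) n nᵀ + n⊥ n⊥ᵀ, with n = ∇I/|∇I| and n⊥ orthogonal to n. Across a strong edge the weight along n shrinks toward zero, while along the edge (n⊥) it stays at one; in flat regions T reduces to the identity. The values of β and γ, the forward-difference gradient, and the function names are illustrative assumptions; the exact ADT used in this paper may differ.

```python
import numpy as np

def gradient_forward(img):
    """Forward differences; the last column/row get zero derivative (Neumann border)."""
    gx = np.zeros_like(img, dtype=float)
    gy = np.zeros_like(img, dtype=float)
    gx[:, :-1] = img[:, 1:] - img[:, :-1]
    gy[:-1, :] = img[1:, :] - img[:-1, :]
    return gx, gy

def anisotropic_diffusion_tensor(img, beta=9.0, gamma=0.85, eps=1e-12):
    """Per-pixel 2x2 tensor T = exp(-beta*|grad|^gamma) n n^T + n_perp n_perp^T.

    beta and gamma are illustrative values (assumption), not taken from the paper.
    """
    gx, gy = gradient_forward(img.astype(float))
    mag = np.sqrt(gx**2 + gy**2)
    # Unit gradient direction n; in flat pixels pick (1, 0), which is harmless
    # because the weight w is 1 there and T becomes the identity anyway.
    nx = np.where(mag > eps, gx / np.maximum(mag, eps), 1.0)
    ny = np.where(mag > eps, gy / np.maximum(mag, eps), 0.0)
    w = np.exp(-beta * mag**gamma)  # small across strong edges, 1 in flat areas
    # n_perp = (-ny, nx); assemble w * n n^T + n_perp n_perp^T component-wise.
    T = np.empty(img.shape + (2, 2))
    T[..., 0, 0] = w * nx * nx + ny * ny
    T[..., 0, 1] = w * nx * ny - nx * ny
    T[..., 1, 0] = T[..., 0, 1]
    T[..., 1, 1] = w * ny * ny + nx * nx
    return T
```

For a vertical step edge, the tensor at the edge pixel almost removes the horizontal (cross-edge) component while keeping the vertical (along-edge) one, so a regularizer built on T smooths along edges rather than across them.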

Abstract:

For camera-based imaging, low resolution and noise outliers are the major challenges. Here, we propose a novel super-resolution method: total generalized variation (TGV) super-resolution based on fast l1-norm dictionary edge representations. First, the anisotropic diffusion tensor (ADT) is used as high-frequency edge information, and the fast l1-norm dictionary representation method is used to create dictionaries of the LR images and the corresponding high-frequency edge information. This method builds the dictionaries quickly from the same database and reduces the influence of outliers. Then, the ADT edge information and the TGV model are combined into a new regularization term. Finally, the super-resolution cost function is established. The results show that the algorithm is feasible and robust on simulated data and ISO 12233 target data: it effectively removes noise outliers and produces high-quality, sharp images. Compared with other classical algorithms, the proposed algorithm achieves higher PSNR and SSIM values.
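The dictionary step can be illustrated with the coupled-dictionary scheme of Yang et al. [15]: an LR feature vector is sparse-coded over an LR dictionary D_l, and the same coefficients synthesize the paired high-frequency feature (here, ADT information) from a second dictionary D_h. The sketch below solves the coding problem min_α ½‖y − D_l α‖² + λ‖α‖₁ with plain ISTA. This is a swapped-in, standard technique for illustration only: it is not the paper's fast l1-norm method, and it keeps a squared-error data term, whereas an outlier-robust variant would use an l1 data term as in the online robust dictionary learning of Lu et al. [18]. The dictionaries, λ, and the function names are hypothetical.

```python
import numpy as np

def soft_threshold(x, t):
    """Proximal operator of t*||x||_1 (element-wise shrinkage)."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def ista_sparse_code(y, D, lam, n_iter=1000):
    """Solve min_a 0.5*||y - D a||_2^2 + lam*||a||_1 with ISTA."""
    # Step size 1/L, where L = sigma_max(D)^2 is the Lipschitz constant of the gradient.
    L = np.linalg.norm(D, 2) ** 2
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ a - y)          # gradient of the quadratic data term
        a = soft_threshold(a - grad / L, lam / L)
    return a

def predict_edge_feature(y_lr, D_l, D_h, lam=0.01):
    """Coupled-dictionary prediction (hypothetical helper):
    code the LR feature over D_l, then synthesize the edge feature with D_h."""
    alpha = ista_sparse_code(y_lr, D_l, lam)
    return D_h @ alpha, alpha
```

Sharing one sparse code between the two dictionaries is what ties an LR patch to its high-frequency counterpart; in practice D_l and D_h would be trained jointly on paired LR/edge features from the same database.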


Overview: This work targets optical cameras on remote sensing, aerial, and other reconnaissance equipment. To address low imaging resolution and severe noise interference, it studies a high-precision super-resolution method that is strongly robust to noise. The proposed method, total generalized variation (TGV) super-resolution based on fast l1-norm dictionary edge representations, is analyzed and compared with conventional methods in several experiments. Overall, it outperforms the classical methods tested: it is feasible and robust on simulated data and ISO 12233 target data, effectively removes noise outliers, and yields high-quality reconstructed images.
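The "low resolution plus noise outliers" setting is commonly simulated with the observation model f = S H u + n: blur of the HR image u by a point spread function H, decimation S, and additive noise n, here extended with a fraction of impulse (salt-and-pepper) outliers. The sketch below assumes a Gaussian approximation of the PSF, integer decimation, Gaussian noise, and intensities in [0, 1]; these choices, the parameter values, and the function names are illustrative assumptions rather than the paper's simulation protocol.

```python
import numpy as np

def gaussian_kernel_1d(sigma, radius=None):
    """Normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = int(np.ceil(3 * sigma)) if radius is None else radius
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()

def blur(img, sigma):
    """Separable Gaussian blur with reflective borders (stand-in for the optical PSF H)."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="reflect")
    # Filter rows, then columns; 'valid' mode trims the padding back off.
    tmp = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, tmp)

def degrade(hr, scale=2, sigma=1.0, noise_std=0.01, outlier_frac=0.02, seed=0):
    """f = S H u + n plus salt-and-pepper outliers (all parameters hypothetical)."""
    rng = np.random.default_rng(seed)
    lr = blur(hr.astype(float), sigma)[::scale, ::scale]  # H, then decimation S
    lr = lr + noise_std * rng.standard_normal(lr.shape)   # additive Gaussian noise n
    mask = rng.random(lr.shape) < outlier_frac             # pixels hit by impulse outliers
    lr[mask] = rng.choice([0.0, 1.0], size=mask.sum())
    return np.clip(lr, 0.0, 1.0)
```

Impulse outliers are what make a purely squared-error fit fragile: a single saturated pixel contributes a large quadratic penalty, which is why outlier robustness is treated as a separate requirement from ordinary noise suppression.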

The detector is a key component of an optical camera. Its discrete sampling and the blur spot of the optical system are the main factors that limit imaging resolution. First, to meet signal-to-noise ratio (SNR) requirements, the Nyquist frequency of the imaging system, which is set by detector sampling, is usually lower than the cutoff frequency of the optical system, and this causes frequency aliasing. Second, diffraction in the optical system spreads each scene point into a blur spot, i.e., a point spread effect. Both factors lower the resolution and quality of camera images and thus degrade reconnaissance. In addition, noise introduced while the optical system acquires the target scene is another important factor affecting image quality.
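The sampling argument can be made concrete with two textbook formulas: a detector with pixel pitch p has Nyquist frequency f_N = 1/(2p), and a diffraction-limited incoherent optical system with wavelength λ and F-number N has cutoff frequency f_c = 1/(λN). Whenever f_N < f_c, the optics pass scene frequencies between f_N and f_c that the detector cannot represent, and these alias. The pitch, wavelength, and F-number below are hypothetical example values, not parameters from the paper.

```python
def detector_nyquist(pitch_mm):
    """Nyquist frequency (cycles/mm) of a detector with the given pixel pitch."""
    return 1.0 / (2.0 * pitch_mm)

def optical_cutoff(wavelength_mm, f_number):
    """Incoherent diffraction cutoff frequency (cycles/mm) of a circular aperture."""
    return 1.0 / (wavelength_mm * f_number)

def aliasing_band(pitch_mm, wavelength_mm, f_number):
    """Return (f_N, f_c, aliases); aliases is True if the optics pass frequencies above f_N."""
    f_n = detector_nyquist(pitch_mm)
    f_c = optical_cutoff(wavelength_mm, f_number)
    return f_n, f_c, f_n < f_c

# Hypothetical example: 10 um pixels, 0.55 um light, f/8 optics.
f_n, f_c, aliases = aliasing_band(pitch_mm=0.010, wavelength_mm=0.00055, f_number=8.0)
print(f"Nyquist = {f_n:.1f} cy/mm, cutoff = {f_c:.1f} cy/mm, aliasing: {aliases}")
# prints: Nyquist = 50.0 cy/mm, cutoff = 227.3 cy/mm, aliasing: True
```

With these numbers the optical passband extends well beyond the detector's Nyquist frequency, which is exactly the regime in which super-resolution can try to recover the aliased detail.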

To address these problems, the proposed method combines sparse representation with total generalized variation and adapts both to this task. First, the anisotropic diffusion tensor (ADT) is used as high-frequency edge information, and the fast l1-norm dictionary representation method is used to create dictionaries of the LR images and the corresponding high-frequency edge information. This method builds the dictionaries quickly from the same database and reduces the influence of outliers. Then, the ADT edge information and the TGV model are combined into a new regularization term. Finally, the super-resolution cost function is established. In the experiments, simulated data and real ISO 12233 target data demonstrate the effectiveness of the algorithm: it not only removes noise outliers from the LR images but also produces more accurate reconstructions than general-purpose super-resolution algorithms, with higher PSNR and SSIM values.
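One common way to combine an edge tensor with second-order TGV is the anisotropic TGV of Ferstl et al. [19]: R(u) = min_w α1‖T^{1/2}(∇u − w)‖₁ + α0‖∇w‖₁ (the original TGV of Bredies et al. [22] uses the symmetrized derivative of w instead of ∇w). The sketch below only evaluates this regularizer for a given image u, auxiliary vector field w, and per-pixel 2×2 tensor field T; it does not minimize anything. The weights α0 and α1, the forward-difference discretization, and the isotropic per-pixel norms are assumptions, and the paper's actual regularizer, data term, and solver may differ.

```python
import numpy as np

def grad(img):
    """Forward differences, zero at the far border; returns shape img.shape + (2,)."""
    g = np.zeros(img.shape + (2,))
    g[:, :-1, 0] = img[:, 1:] - img[:, :-1]   # x-derivative
    g[:-1, :, 1] = img[1:, :] - img[:-1, :]   # y-derivative
    return g

def atgv2_energy(u, w, T, alpha0=2.0, alpha1=1.0):
    """alpha1 * sum |T (grad u - w)| + alpha0 * sum |grad w| (illustrative weights)."""
    r = grad(u) - w                                   # (H, W, 2) first-order residual
    Tr = np.einsum("hwij,hwj->hwi", T, r)             # apply the 2x2 tensor per pixel
    first = np.sqrt((Tr**2).sum(axis=-1)).sum()
    # Jacobian of w per pixel: (H, W, 2, 2); Frobenius norm over the last two axes.
    gw = np.stack([grad(w[..., 0]), grad(w[..., 1])], axis=-2)
    second = np.sqrt((gw**2).sum(axis=(-2, -1))).sum()
    return alpha1 * first + alpha0 * second
```

For a linear ramp, choosing w = ∇u cancels the first-order term, and away from the image border ∇w vanishes as well; this is why second-order TGV does not penalize affine regions and avoids the staircasing typical of plain TV.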


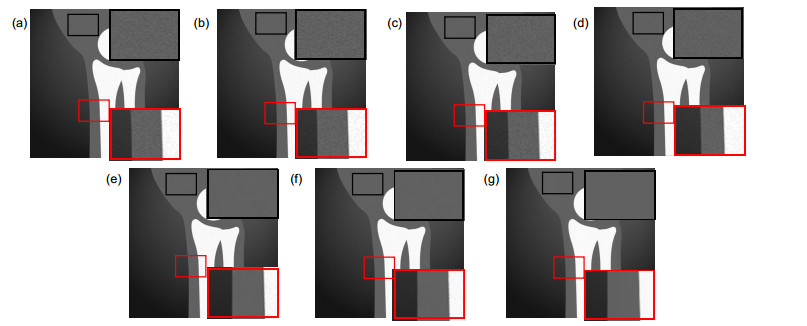

Figure 2. Simulated super-resolution results of (a) the Bicubic method, (b) the BP-JDL method, (c) the SRCNN method, (d) the VDSR method, (e) BP-JDL combined with denoising, (f) SRCNN combined with denoising, and (g) the proposed method

Figure 3. Simulated super-resolution results of (a) the Bicubic method, (b) the BP-JDL method, (c) the SRCNN method, (d) the VDSR method, (e) BP-JDL combined with denoising, (f) SRCNN combined with denoising, and (g) the proposed method

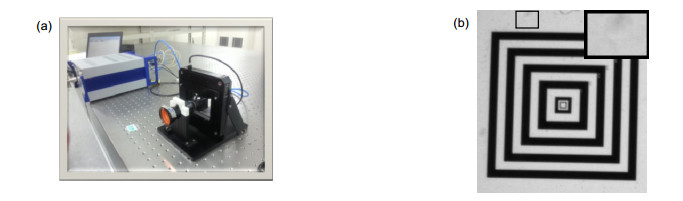

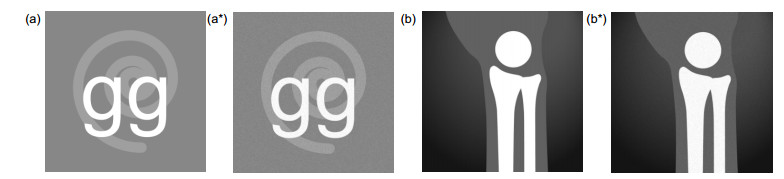

Figure 4. Real-scene images. (a) HR castle image and (a*) its LR counterpart with noise outliers; (b) HR pepper image and (b*) its LR counterpart with noise outliers; (c) HR letter image and (c*) its LR counterpart with noise outliers; (d) HR butterfly image and (d*) its LR counterpart with noise outliers

Figure 5. Super-resolution results for the castle image using (a) the Bicubic method, (b) the BP-JDL method, (c) the SRCNN method, (d) the VDSR method, (e) BP-JDL combined with denoising, (f) SRCNN combined with denoising, and (g) the proposed method

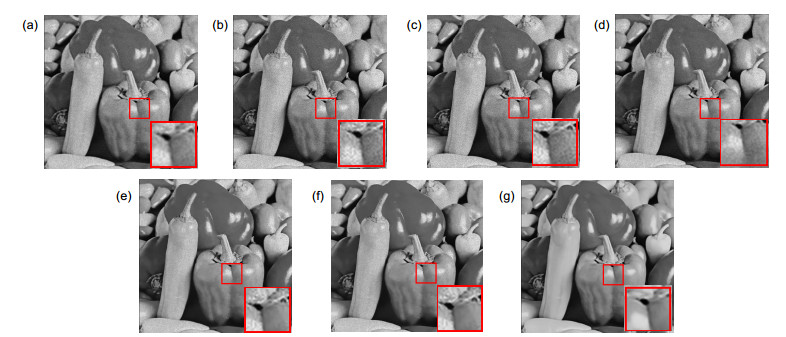

Figure 6. Super-resolution results for the pepper image using (a) the Bicubic method, (b) the BP-JDL method, (c) the SRCNN method, (d) the VDSR method, (e) BP-JDL combined with denoising, (f) SRCNN combined with denoising, and (g) the proposed method

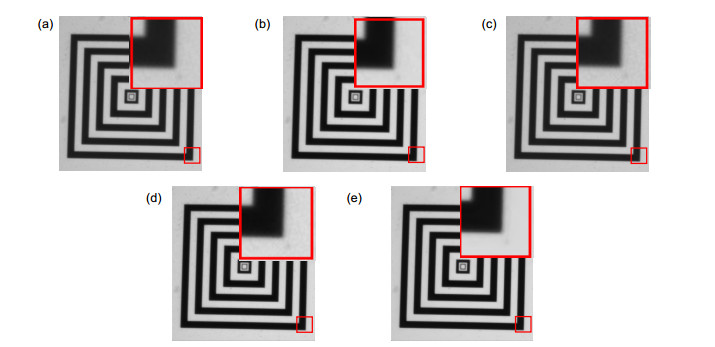

Figure 7. Super-resolution results for the letter image using (a) the Bicubic method, (b) the BP-JDL method, (c) the SRCNN method, (d) the VDSR method, (e) BP-JDL combined with denoising, (f) SRCNN combined with denoising, and (g) the proposed method

Figure 8. Super-resolution results for the butterfly image using (a) the Bicubic method, (b) the BP-JDL method, (c) the SRCNN method, (d) the VDSR method, (e) BP-JDL combined with denoising, (f) SRCNN combined with denoising, and (g) the proposed method

Table 1. PSNR (dB) and SSIM of different methods

| Image | BP-JDL + denoising (PSNR/SSIM) | SRCNN + denoising (PSNR/SSIM) | Proposed method (PSNR/SSIM) |
|---|---|---|---|
| Contour image 1 | 36.8538/0.8768 | 37.0641/0.8799 | 37.8333/0.8950 |
| Contour image 2 | 36.3327/0.8800 | 36.8229/0.8827 | 37.7749/0.8966 |

Table 2. PSNR (dB) and SSIM of different methods

| Image | BP-JDL + denoising (PSNR/SSIM) | SRCNN + denoising (PSNR/SSIM) | Proposed method (PSNR/SSIM) |
|---|---|---|---|
| Castle | 24.4163/0.7115 | 24.2135/0.7220 | 24.8924/0.7413 |
| Pepper | 28.7895/0.8900 | 30.0180/0.8861 | 30.8413/0.8974 |
| Letters | 26.0446/0.8649 | 26.6384/0.8755 | 27.6898/0.8873 |
| Butterfly | 31.1677/0.8516 | 31.6664/0.8723 | 32.7636/0.8919 |
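For reference, PSNR is 10·log10(MAX²/MSE), where MAX is the peak pixel value (255 for 8-bit images) and MSE is the mean squared error against the ground-truth HR image. The sketch below implements this definition and, as a worked example, computes the PSNR gain of the proposed method over SRCNN + denoising from the Table 2 values. SSIM is omitted because its standard form relies on local windowed statistics; a library implementation such as scikit-image's `structural_similarity` is the usual choice. The `max_val` default is an assumption about 8-bit data.

```python
import numpy as np

def psnr(reference, estimate, max_val=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    diff = np.asarray(reference, dtype=float) - np.asarray(estimate, dtype=float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_val**2 / mse)

# PSNR values (dB) from Table 2: (SRCNN + denoising, proposed method).
table2 = {
    "Castle": (24.2135, 24.8924),
    "Pepper": (30.0180, 30.8413),
    "Letters": (26.6384, 27.6898),
    "Butterfly": (31.6664, 32.7636),
}
gains = {name: round(ours - srcnn, 4) for name, (srcnn, ours) in table2.items()}
print(gains)  # gains range from about 0.68 dB (Castle) to about 1.10 dB (Butterfly)
```

Because PSNR is logarithmic in the MSE, a gain of about 1 dB corresponds to roughly a 21% reduction in mean squared error (10^(−0.1) ≈ 0.79).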

[1] Maalouf A, Larabi M C. Colour image super-resolution using geometric grouplets[J]. IET Image Processing, 2012, 6(2): 168-180. http://www.wanfangdata.com.cn/details/detail.do?_type=perio&id=4a1e5024b5d54ce00351ec9e806d2a03

[2] Wang F, Wang W, Qiu Z L. A single super-resolution method via deep cascade network[J]. Opto-Electronic Engineering, 2018, 45(7): 170729. doi: 10.12086/oee.2018.170729 (in Chinese)

[3] Sun C, Lü J W, Li J W, et al. Method of rapid image super-resolution based on deconvolution[J]. Acta Optica Sinica, 2017, 37(12): 1210004. doi: 10.3788/AOS201737.1210004 (in Chinese)

[4] Wang R G, Wang Q H, Yang J, et al. Image super-resolution reconstruction by fusing feature classification and independent dictionary training[J]. Opto-Electronic Engineering, 2018, 45(1): 170542. doi: 10.12086/oee.2018.170542 (in Chinese)

[5] Ng M K, Shen H F, Lam E Y, et al. A total variation regularization based super-resolution reconstruction algorithm for digital video[J]. EURASIP Journal on Advances in Signal Processing, 2007, 2007: 074585. doi: 10.1155/2007/74585

[6] Marquina A, Osher S J. Image super-resolution by TV-regularization and Bregman iteration[J]. Journal of Scientific Computing, 2008, 37(3): 367-382. http://www.wanfangdata.com.cn/details/detail.do?_type=perio&id=13c7a3181e9795353c6bc22b463ae536

[7] Li X L, Hu Y T, Gao X B, et al. A multi-frame image super-resolution method[J]. Signal Processing, 2010, 90(2): 405-414. doi: 10.1016/j.sigpro.2009.05.028

[8] Farsiu S, Robinson M D, Elad M, et al. Fast and robust multiframe super resolution[J]. IEEE Transactions on Image Processing, 2004, 13(10): 1327-1344. doi: 10.1109/TIP.2004.834669

[9] Yue L W, Shen H F, Yuan Q Q, et al. A locally adaptive L1-L2 norm for multi-frame super-resolution of images with mixed noise and outliers[J]. Signal Processing, 2014, 105: 156-174. doi: 10.1016/j.sigpro.2014.04.031

[10] Wang L F, Xiang S M, Meng G F, et al. Edge-directed single-image super-resolution via adaptive gradient magnitude self-interpolation[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2013, 23(8): 1289-1299. doi: 10.1109/TCSVT.2013.2240915

[11] Sun J, Sun J, Xu Z B, et al. Gradient profile prior and its applications in image super-resolution and enhancement[J]. IEEE Transactions on Image Processing, 2011, 20(6): 1529-1542. doi: 10.1109/TIP.2010.2095871

[12] Feng W S, Lei H. Single-image super-resolution with total generalised variation and Shearlet regularisations[J]. IET Image Processing, 2014, 8(12): 833-845. doi: 10.1049/iet-ipr.2013.0503

[13] Ma Z Y, Liao R J, Tao X, et al. Handling motion blur in multi-frame super-resolution[C]//Proceedings of 2015 IEEE Conference on Computer Vision and Pattern Recognition, 2015: 5224-5232.

[14] Yang J C, Wright J, Huang T, et al. Image super-resolution as sparse representation of raw image patches[C]//Proceedings of 2008 IEEE Conference on Computer Vision and Pattern Recognition, 2008: 1-8.

[15] Yang J C, Wright J, Huang T S, et al. Image super-resolution via sparse representation[J]. IEEE Transactions on Image Processing, 2010, 19(11): 2861-2873. doi: 10.1109/TIP.2010.2050625

[16] He L, Qi H R, Zaretzki R. Beta process joint dictionary learning for coupled feature spaces with application to single image super-resolution[C]//Proceedings of 2013 IEEE Conference on Computer Vision and Pattern Recognition, 2013: 345-352.

[17] Liu W R, Li S T. Sparse representation with morphologic regularizations for single image super-resolution[J]. Signal Processing, 2014, 98: 410-422. doi: 10.1016/j.sigpro.2013.11.032

[18] Lu C W, Shi J P, Jia J Y. Online robust dictionary learning[C]//Proceedings of 2013 IEEE Conference on Computer Vision and Pattern Recognition, 2013: 415-422.

[19] Ferstl D, Reinbacher C, Ranftl R, et al. Image guided depth upsampling using anisotropic total generalized variation[C]//Proceedings of 2013 IEEE International Conference on Computer Vision, 2013: 993-1000.

[20] Ferstl D, Ruther M, Bischof H. Variational depth superresolution using example-based edge representations[C]//Proceedings of 2015 IEEE International Conference on Computer Vision, 2015: 513-521.

[21] Dong C, Loy C C, He K M, et al. Image super-resolution using deep convolutional networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2016, 38(2): 295-307. doi: 10.1109/TPAMI.2015.2439281

[22] Bredies K, Kunisch K, Pock T. Total generalized variation[J]. SIAM Journal on Imaging Sciences, 2010, 3(3): 492-526. doi: 10.1137/090769521

[23] Benning M, Brune C, Burger M, et al. Higher-order TV methods-enhancement via Bregman iteration[J]. Journal of Scientific Computing, 2013, 54(2-3): 269-310. doi: 10.1007/s10915-012-9650-3

[24] Bissantz N, Dümbgen L, Munk A, et al. Convergence analysis of generalized iteratively reweighted least squares algorithms on convex function spaces[J]. SIAM Journal on Optimization, 2009, 19(4): 1828-1845. doi: 10.1137/050639132

[25] Kim J, Lee J K, Lee K M. Accurate image super-resolution using very deep convolutional networks[C]//Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, 2016: 1646-1654.
