Visual identification and location algorithm for robots based on multimodal information


Abstract (Chinese version):

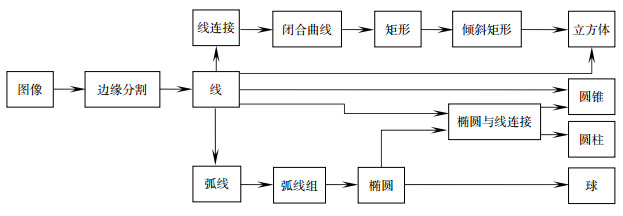

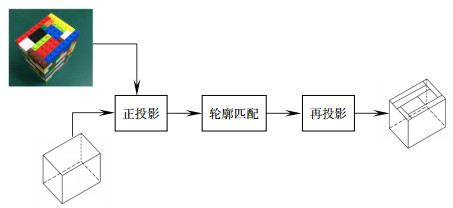

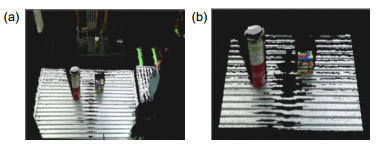

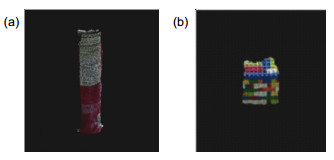

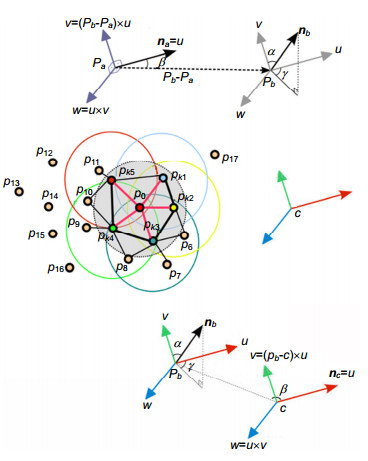

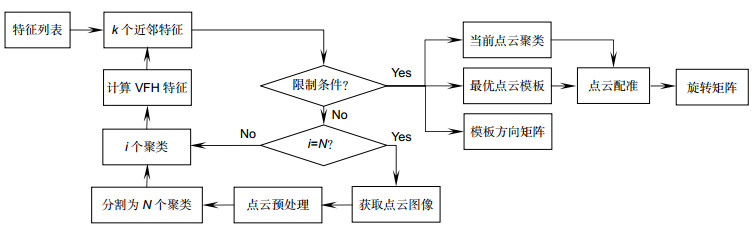

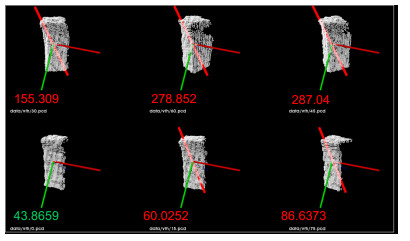

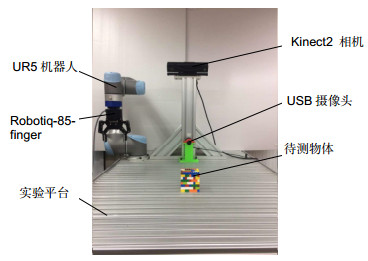

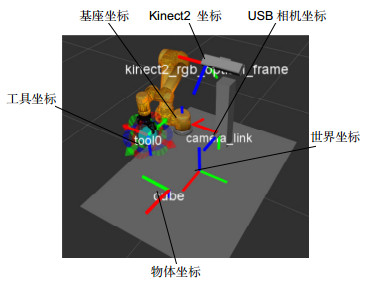

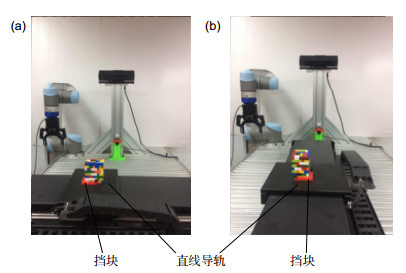

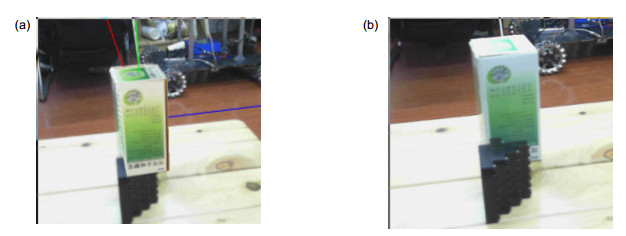

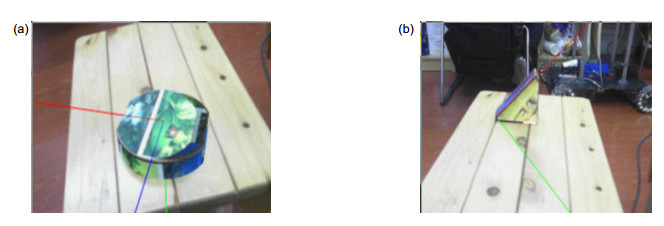

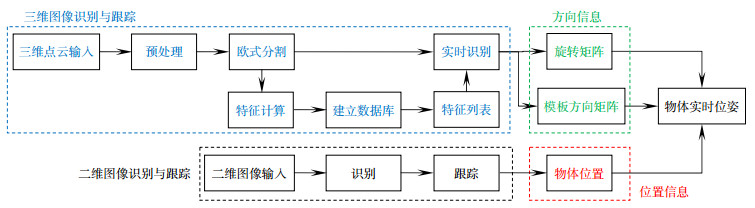

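Current object recognition and localization algorithms rely on a single source of image information, involve complex processing, and have large positioning errors. To address these problems, a visual recognition and localization method based on multimodal information is proposed. It recognizes and locates objects by extracting multimodal information from 2D images and point cloud images. First, a color camera captures 2D image information of the target. Contours are recognized through contour detection and matching, and SIFT features are then extracted from the image for localization and tracking, which yields the position of the object. In parallel, an RGB-D camera captures 3D point cloud information of the target. After preprocessing, Euclidean cluster segmentation, VFH feature computation, and KD-tree search, the best-matching template is obtained and the point cloud is recognized. The orientation of the object is obtained by registering the clustered point cloud. Finally, the object information obtained from 2D image and point cloud processing is used to complete the recognition and localization of the target. The method is validated by robotic-arm grasping experiments. The results show that processing the multimodal information of 2D and point cloud images can effectively recognize and locate target objects of different shapes. Compared with methods that use only 2D or only point cloud single-modality information, the positioning error is reduced by 54.8% and the orientation error by 50.8%, and the method shows good robustness and accuracy.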
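The abstract does not say which criterion is used to match a detected contour against a template. A common choice, and the one behind OpenCV's `cv2.matchShapes`, compares Hu moment invariants. The stdlib-only sketch below illustrates that idea under this assumption. It computes the first two Hu invariants of a filled binary mask (rows of 0/1 values) rather than of an extracted contour. It then scores two shapes with a log-scaled distance modelled on OpenCV's `CONTOURS_MATCH_I1` mode. `hu_first_two` and `match_score` are hypothetical names, not code from the paper.

```python
import math


def hu_first_two(mask):
    """First two Hu moment invariants of a binary mask given as rows of 0/1 values."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    m00 = len(pts)  # zeroth raw moment = foreground area in pixels
    if m00 == 0:
        raise ValueError("mask contains no foreground pixels")
    cx = sum(x for x, _ in pts) / m00  # centroid x = m10 / m00
    cy = sum(y for _, y in pts) / m00  # centroid y = m01 / m00

    def eta(p, q):
        # Central moment (translation invariant), normalised by area (approximately scale invariant).
        mu = sum((x - cx) ** p * (y - cy) ** q for x, y in pts)
        return mu / m00 ** (1 + (p + q) / 2)

    e20, e02, e11 = eta(2, 0), eta(0, 2), eta(1, 1)
    h1 = e20 + e02
    h2 = (e20 - e02) ** 2 + 4 * e11 ** 2
    return h1, h2


def match_score(mask_a, mask_b, eps=1e-12):
    """Dissimilarity in the style of CONTOURS_MATCH_I1; 0 means identical invariants."""
    score = 0.0
    for ha, hb in zip(hu_first_two(mask_a), hu_first_two(mask_b)):
        if abs(ha) < eps or abs(hb) < eps:
            continue  # degenerate invariant, e.g. h2 == 0 for a square
        ma = math.copysign(math.log10(abs(ha)), ha)
        mb = math.copysign(math.log10(abs(hb)), hb)
        if ma != 0 and mb != 0:
            score += abs(1 / ma - 1 / mb)
    return score
```

A translated copy of a shape scores 0, and an 8×2 bar matches its 90° rotation exactly, while a square and a bar score clearly apart. Any acceptance threshold on the score is application-specific and is not given in the abstract.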

Abstract: To overcome the problems of a single image source, complex processing, and inaccurate positioning in existing object recognition and positioning algorithms, a visual identification and location algorithm based on multimodal information is proposed. Multimodal information is extracted from the two-dimensional image and the point cloud image and fused to recognize and locate objects. First, 2D image information of the target is obtained with an RGB camera. Contours are recognized through contour detection and matching, and SIFT features are then extracted from the image for location tracking, which yields the position of the object. Meanwhile, a point cloud image is captured with an RGB-D camera, and the best-matching template is found through preprocessing, Euclidean cluster segmentation, VFH feature computation, and KD-tree search, which identifies the point cloud image. The orientation is then obtained by registering the point clouds. Finally, the object information obtained from the two-dimensional image and the point cloud image is combined to complete the identification and positioning of the target. The method is verified by robotic grasping experiments. The results show that the multimodal information of two-dimensional and point cloud images can be used to identify and locate target objects of different shapes. Compared with methods that use only two-dimensional or only point cloud single-modality information, the positioning error is reduced by 54.8% and the orientation error by 50.8%, with better robustness and accuracy.
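The abstract says SIFT features are extracted for location tracking but gives no matching details. A widely used way to match SIFT descriptors is nearest-neighbour search with Lowe's ratio test. The sketch below assumes the descriptors and keypoint coordinates have already been computed, for example with OpenCV's `cv2.SIFT_create().detectAndCompute`. As a hypothetical simplification, it takes the object position to be the mean image coordinate of the accepted matches. The function names and the 0.75 ratio, a common default, are assumptions rather than values from the paper. Real SIFT descriptors are 128-dimensional; the toy vectors in the test are 2D.

```python
import math


def ratio_test_matches(query, train, ratio=0.75):
    """Pair each query descriptor with its nearest train descriptor, keeping the pair
    only when that neighbour is clearly closer than the second-nearest (Lowe's ratio test)."""
    matches = []
    for qi, q in enumerate(query):
        # (distance, index) to every train descriptor, nearest first.
        ranked = sorted((math.dist(q, t), ti) for ti, t in enumerate(train))
        if len(ranked) < 2:
            continue
        (d1, best), (d2, _) = ranked[0], ranked[1]
        if d1 < ratio * d2:
            matches.append((qi, best))
    return matches


def estimate_position(matches, scene_keypoints):
    """Hypothetical simplification: object position = mean (x, y) of the matched scene keypoints."""
    if not matches:
        return None
    xs = [scene_keypoints[ti][0] for _, ti in matches]
    ys = [scene_keypoints[ti][1] for _, ti in matches]
    return sum(xs) / len(xs), sum(ys) / len(ys)
```

A brute-force scan is used here for clarity. With many 128-dimensional descriptors, OpenCV's `cv2.BFMatcher` with `knnMatch(..., k=2)` or a FLANN-based matcher performs the same nearest/second-nearest lookup more efficiently.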


Key words:

- 2D image

- point cloud

- multimodal

- feature recognition and positioning

- robot


Overview: In recent years, many kinds of robots have been studied and deployed as robotics technology has developed. In industrial production, workers often have to carry out a large amount of handling and assembly work. Having robots take over these tasks would increase factory efficiency and reduce labor intensity. Sensing the position of objects is one of the key problems a robot must solve to pick up objects. Machine vision is an effective way to solve this problem and is currently one of the research hotspots in robotics. Traditional methods are based on two-dimensional images; because two-dimensional images cannot meet every requirement, researchers have extended their work to three-dimensional images. In recent years, neural networks have been widely applied and machine vision has developed rapidly. However, most traditional methods rely on a single source of image information, usually one type of image. Because real scenes are often complicated, these methods typically suffer from incomplete image information, large recognition errors, and low accuracy.

To overcome these problems, a visual identification and location algorithm based on multimodal information is proposed: multimodal information is extracted from the two-dimensional image and the point cloud image and fused to recognize and locate objects. First, the 2D image of the target is captured with an RGB camera, and its contour is recognized through contour detection and matching. SIFT features are then extracted from the image for location tracking, which gives the position of the object. Meanwhile, to identify the point cloud image, a point cloud is captured with an RGB-D camera, and the best-matching template is selected through preprocessing, Euclidean cluster segmentation, VFH feature computation, and KD-tree search. The orientation is then obtained by registering the point clouds. Finally, the object information from the two-dimensional image and the point cloud image is combined to complete the identification and positioning of the target. The method is verified by robotic grasping experiments. The results show that the multimodal information of two-dimensional and point cloud images can be used to identify and locate different target objects. Compared with methods that use only two-dimensional or only point cloud single-modality information, the positioning error is reduced by 54.8% and the orientation error by 50.8%, and robustness and accuracy are improved.
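In PCL, Euclidean cluster extraction (`pcl::EuclideanClusterExtraction`, configured with `setClusterTolerance` and `setMinClusterSize`) runs on top of a KD-tree. A KD-tree is also the usual structure for finding the template whose VFH descriptor lies closest to that of a segmented cluster. The stdlib-only sketch below illustrates both uses with one small KD-tree: radius search to grow clusters, and nearest-neighbour search over descriptor vectors. It does not compute VFH itself. A real VFH descriptor is a 308-bin histogram produced by PCL's `VFHEstimation`, and the short 3D vectors in the test stand in for it. The class and function names, tolerance, and sizes are hypothetical.

```python
import math
from collections import deque


class KDTree:
    """Minimal static k-d tree over a list of equal-length numeric tuples."""

    def __init__(self, points):
        self.points = list(points)
        self.root = self._build(list(range(len(self.points))), 0)

    def _build(self, idx, depth):
        if not idx:
            return None
        axis = depth % len(self.points[idx[0]])
        idx.sort(key=lambda i: self.points[i][axis])
        mid = len(idx) // 2
        # Node layout: (point index, split axis, left subtree, right subtree).
        return (idx[mid], axis,
                self._build(idx[:mid], depth + 1),
                self._build(idx[mid + 1:], depth + 1))

    def radius_search(self, q, r):
        """Indices of all stored points within distance r of q."""
        found, stack = [], [self.root]
        while stack:
            node = stack.pop()
            if node is None:
                continue
            i, axis, left, right = node
            if math.dist(q, self.points[i]) <= r:
                found.append(i)
            diff = q[axis] - self.points[i][axis]
            # Descend only into halves that the query ball can reach.
            if diff - r <= 0:
                stack.append(left)
            if diff + r >= 0:
                stack.append(right)
        return found

    def nearest(self, q):
        """(index, distance) of the stored point closest to q."""
        best = [None, math.inf]

        def visit(node):
            if node is None:
                return
            i, axis, left, right = node
            d = math.dist(q, self.points[i])
            if d < best[1]:
                best[0], best[1] = i, d
            diff = q[axis] - self.points[i][axis]
            near, far = (left, right) if diff <= 0 else (right, left)
            visit(near)
            # The far half can hold a closer point only if the split plane is nearer than the best so far.
            if abs(diff) < best[1]:
                visit(far)

        visit(self.root)
        return best[0], best[1]


def euclidean_clusters(points, tolerance, min_size=1):
    """Region growing: points linked by neighbours within `tolerance` share a cluster."""
    tree = KDTree(points)
    seen = [False] * len(points)
    clusters = []
    for seed in range(len(points)):
        if seen[seed]:
            continue
        seen[seed] = True
        queue, cluster = deque([seed]), []
        while queue:
            i = queue.popleft()
            cluster.append(i)
            for j in tree.radius_search(points[i], tolerance):
                if not seen[j]:
                    seen[j] = True
                    queue.append(j)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return clusters
```

The same `KDTree` class serves both steps. Built over 3D points, it drives clustering. Built over one descriptor per stored template, `nearest` returns the index of the best-matching template for a query descriptor.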


Table 1. The positioning results along the X-axis

| No. | Actual X/mm | Computed Xn/mm | Computed Yn/mm | Computed Zn/mm | Error (Xn-Xn-1)-20 /mm | Error (Yn-Yn-1) /mm | Error (Zn-Zn-1) /mm |
|---|---|---|---|---|---|---|---|
| 1 | 50.0 | 243.0 | -10.6 | 163.0 | | | |
| 2 | 70.0 | 265.5 | -10.3 | 163.8 | 2.5 | 0.3 | 0.8 |
| 3 | 90.0 | 284.0 | -9.9 | 165.6 | -1.5 | 0.4 | 1.8 |
| 4 | 110.0 | 302.0 | -8.7 | 166.2 | 2.0 | 1.2 | 0.6 |
| 5 | 130.0 | 322.5 | -8.5 | 166.6 | 0.5 | -0.2 | 0.4 |
| 6 | 150.0 | 343.0 | -8.3 | 167.9 | 0.5 | 0.2 | 1.3 |
| 7 | 170.0 | 363.6 | -7.8 | 168.9 | 0.6 | 0.5 | 1.0 |
| 8 | 190.0 | 382.0 | -7.0 | 169.0 | -1.4 | 0.8 | 0.1 |
| 9 | 210.0 | 404.0 | -6.4 | 171.0 | 2.0 | 0.6 | 2.0 |
| 10 | 230.0 | 422.0 | -6.0 | 172.0 | 2.0 | 0.4 | 1.0 |
| 11 | 250.0 | 442.0 | -6.0 | 172.4 | 0.0 | 0.0 | 0.4 |

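Table 2. The positioning results along the Y-axis

| No. | Actual Y/mm | Computed Xn/mm | Computed Yn/mm | Computed Zn/mm | Error (Xn-Xn-1) /mm | Error (Yn-Yn-1)-20 /mm | Error (Zn-Zn-1) /mm |
|---|---|---|---|---|---|---|---|
| 1 | 0 | 397.8 | -174.6 | 174.8 | | | |
| 2 | 20.0 | 398.0 | -154.9 | 174.2 | 0.2 | -0.3 | -0.6 |
| 3 | 40.0 | 396.9 | -134.5 | 174.2 | -1.1 | 0.4 | 0.0 |
| 4 | 60.0 | 397.7 | -110.6 | 174.7 | 0.8 | 3.9 | 0.5 |
| 5 | 80.0 | 401.0 | -91.9 | 174.3 | 3.3 | -1.5 | -0.4 |
| 6 | 100.0 | 396.0 | -70.4 | 172.5 | -5.0 | 1.5 | -1.8 |
| 7 | 120.0 | 400.5 | -50.2 | 173.2 | 4.5 | 0.2 | 0.7 |
| 8 | 140.0 | 400.6 | -28.6 | 174.0 | 0.1 | 1.6 | 0.8 |
| 9 | 160.0 | 400.1 | -9.3 | 172.1 | -0.5 | -0.7 | -1.9 |
| 10 | 180.0 | 400.3 | 12.1 | 172.7 | 0.2 | 1.4 | 0.6 |
| 11 | 200.0 | 400.9 | 32.5 | 172.6 | 0.6 | 0.4 | -0.1 |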
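Tables 1 and 2 report, for each row after the first, the change in each computed coordinate relative to the previous row. Along the axis being moved, the 20 mm displacement between rows is subtracted. The short sketch below reproduces that arithmetic; `step_errors` is a hypothetical helper, and results are rounded to 0.1 mm as in the tables.

```python
def step_errors(computed, moved_axis, step=20.0):
    """Per-step errors for consecutive computed (X, Y, Z) positions in mm.

    Along `moved_axis` (0 = X, 1 = Y, 2 = Z) the expected change between rows is
    `step`; along the other two axes it is zero. Returns one (dX, dY, dZ) tuple
    for every row after the first, rounded to 0.1 mm.
    """
    errors = []
    for prev, cur in zip(computed, computed[1:]):
        row = []
        for axis in range(3):
            delta = cur[axis] - prev[axis]
            if axis == moved_axis:
                delta -= step  # remove the 20 mm displacement between rows
            row.append(round(delta, 1))
        errors.append(tuple(row))
    return errors


# First four rows of Table 2 (the object moves along Y).
table2 = [(397.8, -174.6, 174.8), (398.0, -154.9, 174.2),
          (396.9, -134.5, 174.2), (397.7, -110.6, 174.7)]
for e in step_errors(table2, moved_axis=1):
    print(e)
```

The three printed tuples agree with the error columns of rows 2 to 4 of Table 2. Passing `moved_axis=0` applies the same rule to the X-axis data of Table 1.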

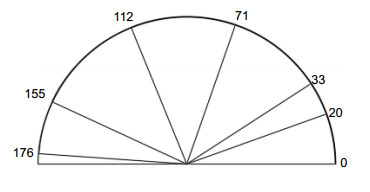

Table 3. The rotation error results

| No. | True value/(°) | Computed value/(°) | Error/(°) |
|---|---|---|---|
| 1 | 20.0 | 16.8 | 3.8 |
| 2 | 33.0 | 38.6 | 5.6 |
| 3 | 71.0 | 66.4 | 4.6 |
| 4 | 112.0 | 116.5 | 4.5 |
| 5 | 155.0 | 160.2 | 5.2 |
| 6 | 176.0 | 171.4 | 4.6 |
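The paper obtains orientation by registering the clustered point cloud against a template but does not name the registration algorithm. Iterative methods such as ICP (available in PCL as `pcl::IterativeClosestPoint`) repeatedly solve a closed-form alignment for the current point correspondences. Assume the correspondences are already known and only the in-plane rotation matters, as for the angles in Table 3. Under those assumptions, that inner step reduces to the 2D least-squares (Procrustes) solution sketched below. `angle_error` wraps the difference into [0°, 180°]. Both functions are illustrative and are not the paper's code.

```python
import math


def estimate_yaw(src, dst):
    """Least-squares rotation angle (degrees, in [0, 360)) mapping paired 2D points src onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    tx, ty = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        # Centre both sets so a translation between them does not bias the angle.
        ax, ay, bx, by = ax - sx, ay - sy, bx - tx, by - ty
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    # Maximising sum(b . R(theta) a) = cos(theta) * dot + sin(theta) * cross gives theta = atan2(cross, dot).
    return math.degrees(math.atan2(cross, dot)) % 360.0


def angle_error(true_deg, est_deg):
    """Absolute angular difference in degrees, wrapped into [0, 180]."""
    d = abs(true_deg - est_deg) % 360.0
    return min(d, 360.0 - d)
```

For example, `angle_error(176.0, 171.4)` rounds to 4.6°, as in row 6 of Table 3. Wrapping matters when the true and estimated angles straddle 0°/360°: `angle_error(359.0, 1.0)` is 2.0°, not 358°.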

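Table 4. The error results of different algorithms

| No. | 2D + 3D image: position/mm | 2D + 3D image: orientation/(°) | 2D image only: position/mm | 2D image only: orientation/(°) | 3D image only: position/mm | 3D image only: orientation/(°) |
|---|---|---|---|---|---|---|
| 1 | 1.2 | 5.2 | 1.3 | 10.1 | 2.1 | 5.3 |
| 2 | 0.8 | 4.5 | 1.5 | 9.8 | 1.9 | 5.1 |
| 3 | 1.1 | 4.4 | 1.2 | 8.8 | 2.2 | 5.0 |
| 4 | 1.0 | 4.8 | 1.5 | 9.7 | 2.2 | 4.8 |
| 5 | 0.6 | 5.1 | 1.0 | 10.2 | 2.0 | 4.9 |
| Mean | 0.94 | 4.8 | 1.3 | 9.72 | 2.08 | 5.02 |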
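The percentage reductions quoted in the abstract can be checked against the mean row of Table 4, computing (single-modality mean - multimodal mean) / single-modality mean. The 54.8% position figure corresponds to the point-cloud-only baseline (2.08 mm to 0.94 mm). The orientation figure corresponds to the 2D-only baseline (9.72° to 4.8°), which these rounded means put at about 50.6%, close to the 50.8% stated in the abstract. Against the other baseline in each case the reduction is smaller: about 27.7% in position against 2D only and about 4.4% in orientation against 3D only. `relative_reduction` is a hypothetical helper.

```python
def relative_reduction(baseline, improved):
    """Percentage by which `improved` is smaller than `baseline`."""
    return (baseline - improved) / baseline * 100.0


# Mean errors from Table 4: (position in mm, orientation in degrees).
means = {"2D+3D": (0.94, 4.8), "2D only": (1.3, 9.72), "3D only": (2.08, 5.02)}

fused_pos, fused_ori = means["2D+3D"]
for name in ("2D only", "3D only"):
    pos, ori = means[name]
    print(f"vs {name}: position error reduced by {relative_reduction(pos, fused_pos):.1f}%, "
          f"orientation error reduced by {relative_reduction(ori, fused_ori):.1f}%")
```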

Table 5. The error results for different objects

| Object | Position/mm | Orientation/(°) |
|---|---|---|
| LEGO brick | 0.94 | 4.8 |
| Cylinder | 0.86 | 4.9 |
| Triangular prism | 1.11 | 5.1 |

